Aurora RDS (out) performs

For a little over 10 years, Amazon Web Services (AWS) has been continually innovating in the cloud infrastructure domain. Which we love, because here at Shine Products we get the opportunity to leverage these innovations to provide better outcomes for our clients in the Energy industry. Which we also love!

The introduction of Aurora RDS is just one of the many ways that AWS has once again delivered on that innovation by providing a fully managed database service with a list of performance optimisations at a competitive price that only AWS seem to be able to deliver. With such an appealing promise, we’ve been trying to see what this service can do for us.

The target for us was to shift one of our Oracle installations over to Aurora RDS with a Postgres engine. We chose Postgres as our engine over MySQL as many of our applications have batch processing components. Analysis using the AWS schema conversion tool (SCT) showed that the conversion process would be able to convert 100 percent of storage objects (i.e. tables) and 88 percent of database objects (i.e. procedures). I strongly recommend utilising the AWS SCT to provide a massive head-start on the database conversion process. From there the bulk of the work involving converting the remaining database objects, changing our applications hibernate configuration and modifying some of the hibernate queries.

AWS provide a helpful conversion page that can also be used for guidance of the kind of obstacles you may be up against at:https://aws.amazon.com/blogs/database/challenges-when-migrating-from-oracle-to-postgresql-and-how-to-overcome-them/

Our first round of testing was some quick user interface testing. The main issues we had centred around the use of date logic. Postgres is quite different to Oracle, but the good news is that once you work out what needs to change, you can usually perform the same updates to all your date logic quickly. In terms of front-end performance, our application is quite small with only small data sets being returned so the experience between Oracle and Aurora (Postgres) was approximately the same.

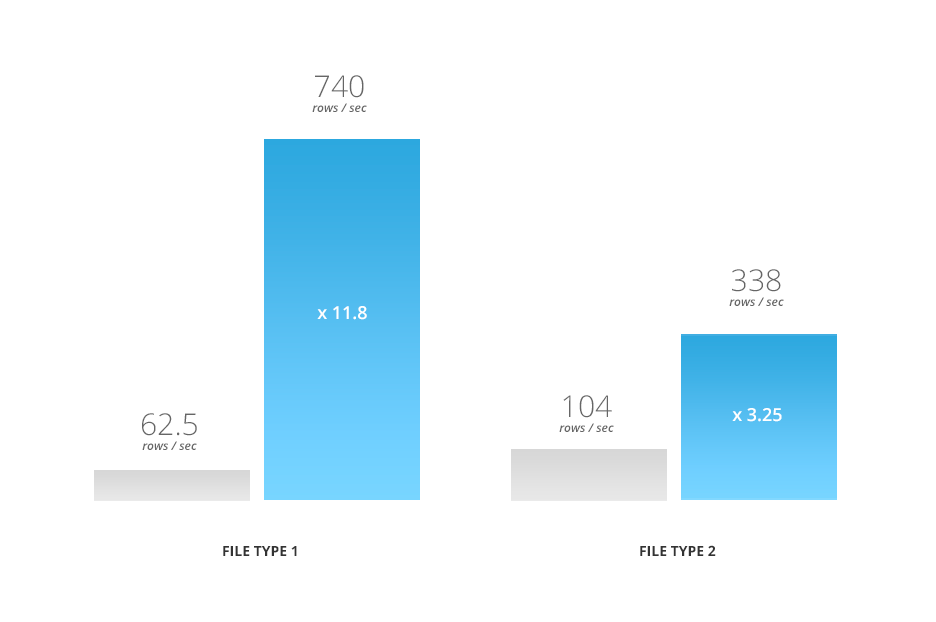

The next part of our test involved some file load processing. To ensure a valid test case, we selected an environment that was currently using a db.m3.large instance and compared it to an Aurora db.r4.large. AWS assure us that the Aurora Postgres database can be up-to three times faster than a standard Postgres database https://aws.amazon.com/rds/aurora/faqs/ so this was the first real chance to put that to the test.

The below comparisons detail the result of this test:

The last file type is the smallest file so it is not too surprising that the performance didn’t improve dramatically. The first file type on the other hand had about 350 times as much data and we could see a massive improvement in speed. It is likely higher for larger files in our case due to less overhead from the application itself.

Still, even these files don’t necessarily show off Aurora Postgres’ benefits as these files generally load in the background and don’t impact the user’s workflow. So, the final test was performed over the major batch run process. This process runs up to four times in a week and provides the full reconciliation between multiple sources of data. In Oracle, the process on average took between 12 and 14 hours. As our test case, we reran one of these processes that took 13 hours, again on the comparable database servers.

The results were startling. The Aurora Postgres enabled the system to complete our test case in a fraction under five hours. We had nearly tripled the performance of the key process within the application and the main reason for this was without question the database layer.

But performance isn’t the only success criteria. We validated 15 individual batch runs to compare the data produced for consistency and found that the data produced by Aurora Postgres was a complete duplicate of the original data produced under Oracle.

Our concerns that the handling of data types might introduce slight variances were proven to be unfounded. The SCT did an excellent job of converting the database structure. We also noticed that our current database runs at nearly 100 percent CPU during the key batch process. As the following charts demonstrate, the Aurora Postgres database consistently ran at an average of 20-40 percent with the occasional 80-90 percent spikes for short periods. This means that there may even be more room for the application to be modified to push the Aurora instance harder.

The Cost of Converting from Oracle to Aurora Postgres

What about the cost? It’s great that it appears to be faster, but there is a cost of converting the database to support an application. The following table lists the prices for On Demand RDS instances of Oracle and Aurora Postgres for the same instance types (db.r4.large):

| Database | Cost per hour |

| Oracle (Multi AZ),(licence included) | $1.128 US |

| Aurora (Multi AZ) | $0.35 |

We can see that the same Aurora instance type versus Oracle is roughly a third of the on-demand price, making the overall cost benefits associated with moving away from Oracle an enticing one.

Key Benefits

Despite this experiment being largely about ensuring we see an improvement in performance and reduction in cost, the Amazon Aurora service provides some extra benefits that are not currently available in Oracle RDS. Some of these are:

* Automatic size increment – no more managing disk space size.

* Maximum database size of 64 TB. The current Oracle hard limit is 16TB.

* Support for cross-region replicas.

* Performance insights at no extra cost.

Conclusion – Three Times Faster, Half the Cost

The results of our conversion have been extremely positive overall. Our application is now almost three times faster at nearly half the cost.

Aurora Postgres is still a relatively new service and we think there’s still room for improvement. The biggest blocker for us is the fact that you cannot use Amazon’s Data Migration Service (DMS) to replicate your data from Aurora Postgres into Redshift. The need for near real-time replication into data warehouses has become critical for many of our customers. If your organisation also has this requirement, you may want to consider remaining on your existing platform until AWS makes this capability available.

Not every app will be easy to convert to Aurora Postgres, especially if your application has lots of stored procedures, functions or Oracle packages. But if your applications usage characteristics lean towards a Postgres style database, then you should at least run the SCT report, which advises what kind of impediments you are up against.

Past experiences have shown us that AWS continue to innovate faster than other cloud providers and we’re very excited to see where AWS can take this service.